Project title: Development of a Stock Price Prediction Model Using LSTM Networks

Course: MSc / Master of Science in Data and Artificial Intelligence

Student name: Mohammed Talha Sajidhusein Vasanwala

Rationale: What was the reason/motivation for choosing the project?

The volatility of stock markets presents a major challenge for financial forecasting. Traditional methods, while effective in some cases, struggle to capture the inherent complexities of financial data, such as time dependencies, trends, and market shocks. Tesla’s stock price, known for its rapid fluctuations, sparked my interest in this project. The motivation behind choosing it was twofold:

- To explore how Long Short-Term Memory (LSTM) networks can be optimized to capture and predict the volatile patterns in stock price data.

- To address the limitations of traditional models and highlight the potential of LSTM models for accurate financial forecasting, especially in dynamic environments like stock trading.

Q. Brief Overview of the Practical Implementation (Text Description and a Few Images)

The practical implementation of this project involved multiple stages, including data preprocessing, building and testing LSTM models, and evaluating the models’ predictive performance. Here’s a breakdown of the key steps:

Data Preprocessing:

- Tesla’s historical stock data (specifically ‘Close’ prices) was collected and cleaned.

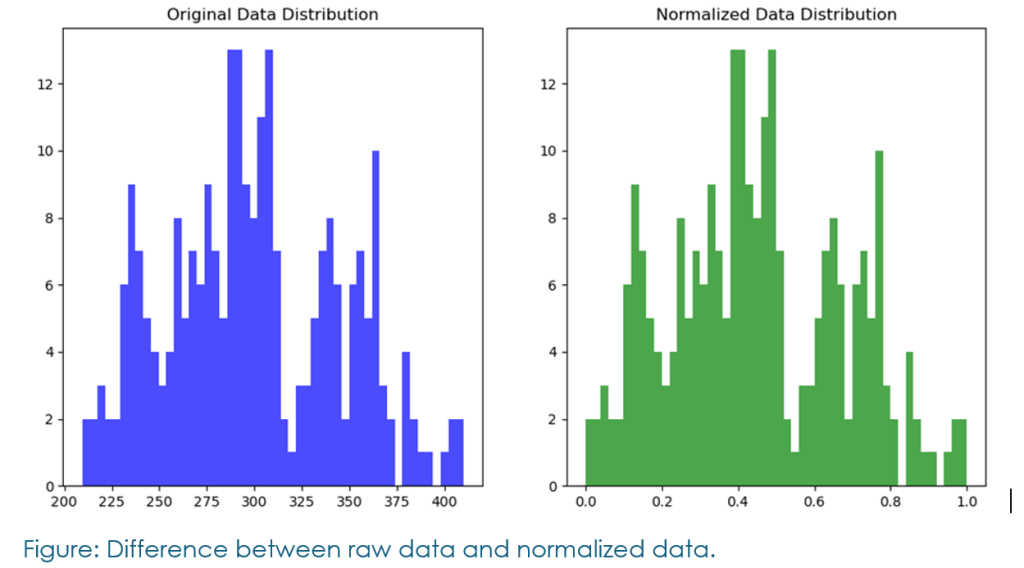

- The data was normalized with MinMaxScaler, which rescales prices to a fixed range (by default [0, 1]); keeping inputs on a small, consistent scale helps the LSTM train faster and more stably.

- The dataset was then split into training and testing sets in chronological order, without shuffling, so the model is always tested on data that comes after the data it was trained on.
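The preprocessing steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the project’s code: the 80/20 split, the 60-step input window, the hypothetical helper names `make_windows` and `preprocess`, and the synthetic price series are all assumptions. One design choice worth noting is that the sketch fits MinMaxScaler on the training portion only and reuses it for the test portion, which keeps information about future prices out of training.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler


def make_windows(series: np.ndarray, window: int) -> tuple[np.ndarray, np.ndarray]:
    """Hypothetical helper: turn a 1-D series into LSTM inputs and next-step targets."""
    X = np.array([series[i - window:i] for i in range(window, len(series))])
    y = series[window:]
    # Keras LSTMs expect input shaped (samples, timesteps, features).
    return X.reshape(-1, window, 1), y


def preprocess(close: np.ndarray, train_frac: float = 0.8, window: int = 60):
    """Hypothetical helper: chronological split, scaling, and windowing of 'Close' prices.

    Assumptions: 80/20 split and a 60-step window (not stated in the original project).
    """
    # Chronological split (no shuffling): every test point is later than every training point.
    split = int(len(close) * train_frac)
    train, test = close[:split], close[split:]

    # Fit the scaler on the training portion only; test values may fall slightly outside [0, 1].
    scaler = MinMaxScaler(feature_range=(0, 1))
    train_scaled = scaler.fit_transform(train.reshape(-1, 1)).ravel()
    test_scaled = scaler.transform(test.reshape(-1, 1)).ravel()

    # Prepend the last `window` training points so the first test target has a full history.
    test_input = np.concatenate([train_scaled[-window:], test_scaled])

    X_train, y_train = make_windows(train_scaled, window)
    X_test, y_test = make_windows(test_input, window)
    return X_train, y_train, X_test, y_test, scaler


# Usage with a synthetic random-walk series standing in for Tesla's 'Close' prices.
rng = np.random.default_rng(0)
close = 200 + np.cumsum(rng.normal(0, 5, size=1000))
X_train, y_train, X_test, y_test, scaler = preprocess(close)
print(X_train.shape, X_test.shape)  # (740, 60, 1) (200, 60, 1)
```

Keeping the scaler object around matters later: its `inverse_transform` method maps scaled predictions back to prices.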

Model Building:

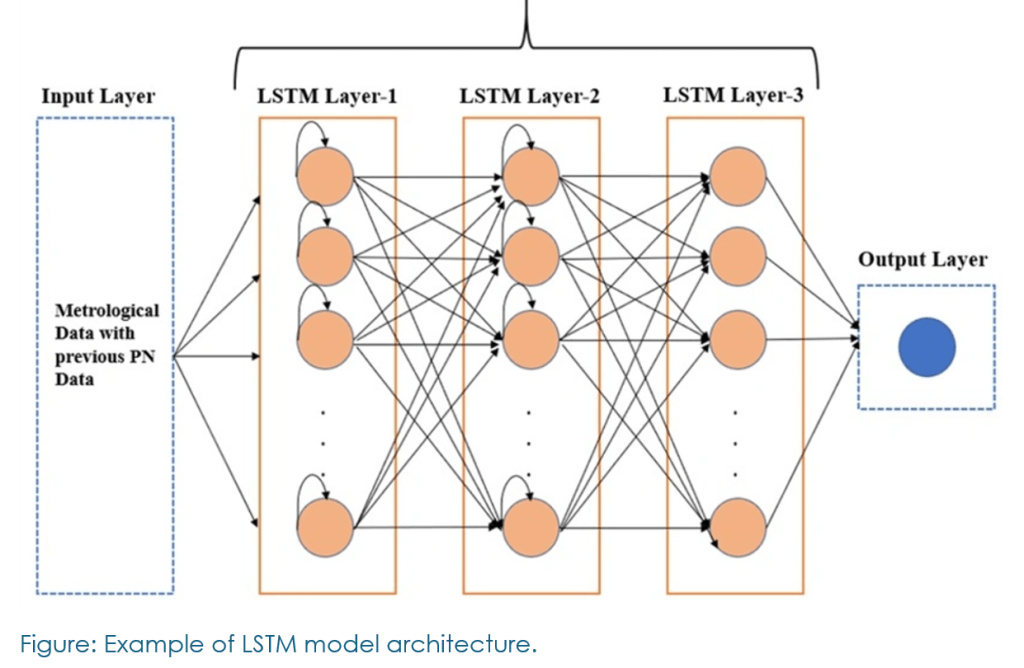

- Thirty different LSTM configurations were tested, focusing on adjusting layers, units, activation functions, and learning rates.

- The final best-performing model consisted of three LSTM layers, each with 64 units and a ‘tanh’ activation function. Training used the ReduceLROnPlateau callback, which lowers the learning rate when the monitored loss stops improving, and the EarlyStopping callback, which halts training once it no longer improves, helping to prevent overfitting.
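A minimal Keras sketch of the model-building stage follows. The defaults of `build_model` reproduce the configuration described above (three stacked LSTM layers of 64 units with tanh activation), paired with the two callbacks; everything else is an assumption, including the 60-step input window, the Adam optimizer and MSE loss, the callback `factor` and `patience` values, and the small grid in the hypothetical `search` helper (24 combinations, not the project’s thirty). ReduceLROnPlateau multiplies the learning rate by `factor` after `patience` epochs without improvement in validation loss, and EarlyStopping ends training after a longer stall, restoring the best weights.

```python
import itertools

import numpy as np
from tensorflow import keras


def build_model(window: int = 60, n_layers: int = 3, units: int = 64,
                activation: str = "tanh", learning_rate: float = 1e-3) -> keras.Model:
    """Stacked LSTM regressor; defaults mirror the best configuration described above."""
    model = keras.Sequential([keras.Input(shape=(window, 1))])
    for i in range(n_layers):
        # Every LSTM except the last returns the full sequence so the next LSTM gets 3-D input.
        model.add(keras.layers.LSTM(units, activation=activation,
                                    return_sequences=i < n_layers - 1))
    model.add(keras.layers.Dense(1))  # one output: the next (scaled) closing price
    # Assumption: Adam and MSE loss; the original write-up does not name an optimizer or loss.
    model.compile(optimizer=keras.optimizers.Adam(learning_rate=learning_rate), loss="mse")
    return model


def make_callbacks() -> list:
    # Assumption: factor/patience values are illustrative, not the project's settings.
    return [
        keras.callbacks.ReduceLROnPlateau(monitor="val_loss", factor=0.5, patience=5, min_lr=1e-6),
        keras.callbacks.EarlyStopping(monitor="val_loss", patience=10, restore_best_weights=True),
    ]


def search(X_train, y_train, window: int = 60, epochs: int = 100):
    """Hypothetical helper: fit each configuration in a small grid, keep the lowest val loss."""
    # Assumed grid over layers, units, activation, and learning rate (3 * 2 * 2 * 2 = 24 configs).
    grid = itertools.product([1, 2, 3], [32, 64], ["tanh", "relu"], [1e-3, 1e-4])
    best = (np.inf, None)
    for n_layers, units, activation, lr in grid:
        model = build_model(window, n_layers, units, activation, lr)
        history = model.fit(X_train, y_train, validation_split=0.1, epochs=epochs,
                            batch_size=32, callbacks=make_callbacks(), verbose=0)
        val_loss = min(history.history["val_loss"])
        if val_loss < best[0]:
            best = (val_loss, (n_layers, units, activation, lr))
    return best


model = build_model()
print(model.predict(np.zeros((2, 60, 1), dtype="float32"), verbose=0).shape)  # (2, 1)
```

Using `validation_split` rather than a separately shuffled hold-out set is a deliberate choice here: Keras takes the validation samples from the end of the training arrays, so the validation data stays later in time than the data the model fits on.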

Training and Evaluation:

- The models were evaluated using RMSE, MAE, MSE, and R² score as the key metrics.

- The best model achieved an RMSE of 0.0456 and an R² score of 0.944, demonstrating its high accuracy.
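The four metrics named above can be computed directly from their definitions, as in this short NumPy sketch. The function name `regression_metrics` is hypothetical; scikit-learn’s `mean_squared_error`, `mean_absolute_error`, and `r2_score` give the same values. MSE, RMSE, and MAE are expressed in the units of the target while R² is unitless, so two RMSE values are only comparable if both were computed on the same scale, either on the MinMaxScaler-normalized values or after mapping predictions back to prices with the scaler’s `inverse_transform`.

```python
import numpy as np


def regression_metrics(y_true, y_pred) -> dict[str, float]:
    """Hypothetical helper: MSE, RMSE, MAE and R² from their textbook definitions."""
    y_true = np.asarray(y_true, dtype=float).ravel()
    y_pred = np.asarray(y_pred, dtype=float).ravel()
    err = y_true - y_pred
    mse = float(np.mean(err ** 2))                         # mean squared error
    ss_res = float(np.sum(err ** 2))                       # residual sum of squares
    ss_tot = float(np.sum((y_true - y_true.mean()) ** 2))  # total sum of squares
    return {
        "MSE": mse,
        "RMSE": float(np.sqrt(mse)),                       # same units as the target
        "MAE": float(np.mean(np.abs(err))),
        "R2": 1.0 - ss_res / ss_tot,                       # 1.0 means a perfect fit
    }


print(regression_metrics([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 5.0]))
# {'MSE': 0.25, 'RMSE': 0.5, 'MAE': 0.25, 'R2': 0.8}
```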

Q. Overview of Outcomes/Conclusions

The project successfully developed an optimized LSTM model that significantly outperformed traditional forecasting methods. The model was able to capture the temporal dependencies in Tesla’s stock price data and deliver highly accurate predictions. Some key outcomes include:

High predictive accuracy: The final model achieved a strong RMSE of 0.0456 and an R² score of 0.944, reflecting its superior performance in predicting Tesla’s stock prices.

Overcoming common challenges: Issues such as overfitting were effectively addressed using advanced regularization techniques and dynamic learning rate adjustments.

Despite the success, the study recognized the limitation of relying solely on historical data. Incorporating real-time data such as news sentiment and company-specific updates could further improve the model’s performance in capturing sudden market changes.

Q. Top Tips/Advice for Students Interested in Completing a University BSc/MSc Degree:

Start Early: Begin your project as soon as possible, ideally right after submitting your research proposal. This gives you ample time to explore different ideas, refine your methods, and address unexpected challenges along the way.

Be Proactive in Securing Data: Data accessibility can sometimes be a bottleneck. Make sure you identify and secure the necessary datasets early in your project, even if they require permissions or payments.

Iterate and Experiment: Machine learning projects, especially those involving deep learning models like LSTMs, benefit greatly from iterative experimentation. Small changes in hyperparameters can have a significant impact on model performance, so don’t hesitate to test various configurations.

Understand Your Tools: Take time to thoroughly understand the libraries and tools you’re using. In my case, Keras and TensorFlow were vital for building the LSTM models, and learning to use them efficiently sped up my development process.

Consult Your Supervisor Regularly: Keep in close contact with your supervisor. Their feedback is invaluable, especially when it comes to refining your methodology and solving challenges related to your project.

Stay Resilient: Research can be unpredictable. You might face challenges like overfitting, lack of data, or even model failure. The key is persistence and a willingness to adjust your approach as needed.

For further information about Computing courses at UWTSD, please visit the UWTSD website.

Written by jameswilliams
